
Adding MX Ink Support to a Unity App

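There are three possible approaches to integrate MX Ink into a Unity app:

- Using the Unity OpenXR plugin

- Using the Meta Core SDK

- Using the legacy Touch Controller mappings (not recommended)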


Developing for MX Ink using the Unity OpenXR plugin

This approach relies on the Unity OpenXR plugin and the Unity XR Input System.

The MX Ink OpenXR Sample App (source code, apk) is a Unity project that shows how to use the prefabs from the MX Ink OpenXR Unity package to create a basic drawing app.

Get the MX Ink OpenXR Unity integration package here:

The following is a more detailed explanation of the configuration requirements and the basic functionality demonstrated in the sample app.

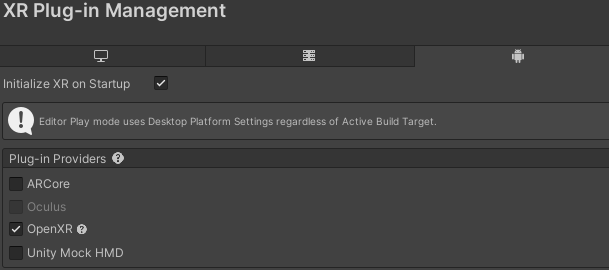

Your application should use Unity’s OpenXR plugin, enable it in the XR Plug-in Management settings:

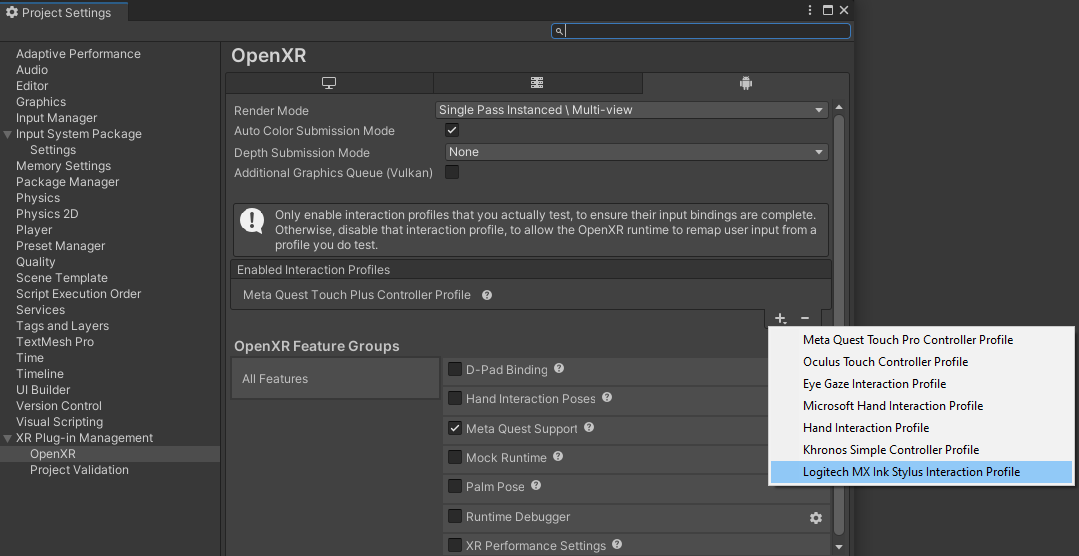

Import the MX Ink OpenXR unity package into your project. This will add support for the MX Ink OpenXR interaction profile, enable it in the OpenXR plug-in settings:

The MX Ink OpenXR integration package includes an example input action mapping, which is used by the MX_Ink prefab.

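MX Ink inputs are mapped to input actions, which can be declared as follows:

```csharp
using UnityEngine;
using UnityEngine.InputSystem;

// [SerializeField] exposes these private fields so they can be assigned in the Inspector.
[SerializeField] private InputActionReference _tipActionRef;
[SerializeField] private InputActionReference _grabActionRef;
[SerializeField] private InputActionReference _optionActionRef;
[SerializeField] private InputActionReference _middleActionRef;
```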

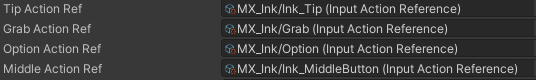

The input action references are configured in the Unity editor:

Enable the input actions, for example in the Awake method:

```csharp
private void Awake()
{
    // Input actions must be enabled before they report values.
    _tipActionRef.action.Enable();
    _grabActionRef.action.Enable();
    _optionActionRef.action.Enable();
    _middleActionRef.action.Enable();
}
```

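This is how the MX Ink inputs can be retrieved in the Update method:

```csharp
private void Update()
{
    // _stylus stores the current stylus state; the pose is copied from this GameObject's transform.
    _stylus.inkingPose.position = transform.position;
    _stylus.inkingPose.rotation = transform.rotation;
    // Analog inputs: tip pressure and middle button value.
    _stylus.tip_value = _tipActionRef.action.ReadValue<float>();
    _stylus.cluster_middle_value = _middleActionRef.action.ReadValue<float>();
    // Digital inputs: front (grab) and back (option) buttons.
    _stylus.cluster_front_value = _grabActionRef.action.IsPressed();
    _stylus.cluster_back_value = _optionActionRef.action.IsPressed();
}
```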

The MxInkHandler.cs script is part of the MX_Ink prefab and implements the code described above. These are the basic components of the scene in the sample app:

In addition to retrieving pose and inputs from the MX Ink Stylus, you can also generate haptic pulses as follows:

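```csharp
// Select the XR node for the hand the stylus is currently assigned to.
var device = stylusIsOnRightHand ? UnityEngine.XR.XRNode.RightHand : UnityEngine.XR.XRNode.LeftHand;
var stylus = UnityEngine.XR.InputDevices.GetDeviceAtXRNode(device);
// Channel 0; amplitude is normalized (0 to 1) and duration is in seconds.
stylus.SendHapticImpulse(0, amplitude, duration);
```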

The code above requires knowing to which hand the stylus is currently assigned. Stylus handedness can be configured in the Stylus Settings in the Meta OS.

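In an application, you can detect which hand the stylus is active on by subscribing to the `InputDevices.deviceConnected` event:

```csharp
using UnityEngine;
using UnityEngine.XR;

// ...
InputDevices.deviceConnected += DeviceConnected;
// ...

private void DeviceConnected(InputDevice device)
{
    Debug.Log($"Device connected: {device.name}");

    // MX Ink reports a device name containing "logitech" (case-insensitive).
    // ToLowerInvariant avoids culture-specific casing (e.g. the Turkish dotless i).
    bool mxInkConnected = device.name.ToLowerInvariant().Contains("logitech");

    if (mxInkConnected)
    {
        // The Right characteristic flag means the stylus is assigned to the right hand.
        stylusIsOnRightHand = (device.characteristics &
            InputDeviceCharacteristics.Right) != 0;
    }
}
```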
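If you drive haptics from the tip value in `Update()`, calling `SendHapticImpulse` on every frame while the tip is pressed would likely produce a continuous buzz rather than a distinct "touch" cue. The following is a minimal sketch of one way to emit a single pulse per contact: a hypothetical `TipContactDetector` class (not part of the MX Ink package) that detects the frame on which the tip starts pressing. The two thresholds (0.1 to press, 0.05 to release) are assumptions; using two values (hysteresis) keeps noise around a single threshold from retriggering the pulse. It is plain C# with no Unity dependency.

```csharp
using System;

// Hypothetical helper, not part of the MX Ink package: reports the single frame on
// which the tip starts pressing, so a haptic pulse can be sent once per contact.
// Two thresholds (hysteresis) keep noise near one threshold from retriggering it.
public sealed class TipContactDetector
{
    private readonly float _pressThreshold;
    private readonly float _releaseThreshold;

    public TipContactDetector(float pressThreshold = 0.1f, float releaseThreshold = 0.05f)
    {
        if (releaseThreshold > pressThreshold)
            throw new ArgumentException("releaseThreshold must not exceed pressThreshold.");
        _pressThreshold = pressThreshold;
        _releaseThreshold = releaseThreshold;
    }

    // True while the tip is considered to be touching a surface.
    public bool InContact { get; private set; }

    // Call once per frame with the tip value (0 to 1).
    // Returns true only on the frame where contact begins.
    public bool Tick(float tipValue)
    {
        if (!InContact && tipValue >= _pressThreshold)
        {
            InContact = true;
            return true;
        }
        if (InContact && tipValue <= _releaseThreshold)
        {
            InContact = false;
        }
        return false;
    }
}
```

In a handler script, this might be used as `if (_contact.Tick(_stylus.tip_value)) stylus.SendHapticImpulse(0, amplitude, duration);`, where `_contact` is a hypothetical field holding a `TipContactDetector` instance.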

See the MX Ink OpenXR Sample App (apk) for more details on how to use MX Ink to draw in the air using the middle button, and on 2D surfaces using the pressure-sensitive tip.
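The sample app distinguishes in-air drawing (middle button) from surface drawing (tip). As a minimal sketch, this choice and a pressure-dependent line width could be derived from the per-frame values read in `Update()` (`tip_value` and `cluster_middle_value`). `DrawMode`, `DrawingInput`, the 0.05 threshold, and the rule that the tip takes priority over the middle button are all assumptions for illustration, not the sample app's actual implementation.

```csharp
using System;

// Hypothetical drawing modes matching the two techniques described above.
public enum DrawMode { None, InAir, OnSurface }

// Hypothetical helper, not the sample app's implementation.
public static class DrawingInput
{
    // Assumed dead band: analog values at or below this count as released.
    public const float Threshold = 0.05f;

    // Assumption: the tip takes priority, so a pressed tip means surface drawing.
    public static DrawMode GetMode(float tipValue, float middleValue)
    {
        if (tipValue > Threshold) return DrawMode.OnSurface;
        if (middleValue > Threshold) return DrawMode.InAir;
        return DrawMode.None;
    }

    // Linearly maps the active input's value (clamped to 0..1) to a line width.
    public static float GetLineWidth(DrawMode mode, float tipValue, float middleValue,
                                     float minWidth, float maxWidth)
    {
        float pressure = mode == DrawMode.OnSurface ? tipValue
                       : mode == DrawMode.InAir ? middleValue
                       : 0f;
        pressure = Math.Max(0f, Math.Min(1f, pressure));
        return minWidth + (maxWidth - minWidth) * pressure;
    }
}
```

A linear mapping is the simplest choice; an app could substitute a curve if light pressure should produce noticeably thinner lines.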

Developing for MX Ink using Meta Core SDK

For this approach, your application will need to be built using the Oculus plugin, so make sure to enable Oculus in the XR Plug-in Management settings.

The Meta Core SDK v68.0.2 introduced new API methods enabling developers to manage any controller with an associated OpenXR Interaction Profile. Starting with Meta OS v68, the MX Ink Interaction Profile is supported by the Meta OpenXR runtime.

Retrieving data from MX Ink through its corresponding Interaction Profile provides access to all stylus inputs, including the ‘docked’ flag, which is set when the stylus is placed in the MX Inkwell (charging dock). More importantly, it provides a reliable way to detect when MX Ink is active, so applications can adapt their UI/UX accordingly.

Using the MX Ink OpenXR Interaction Profile in a Unity application

The MX Ink Unity Integration Package for Meta Core SDK contains the code described below, along with the prefabs required to display a 3D model of the MX Ink stylus and a simple drawing script.

Get the MX Ink Unity Integration Package with support for Meta Core SDK here:

The MX Ink Sample App is a Unity project that shows how to use the prefabs from the integration package to create a basic drawing app.

Follow these steps to manually add MX Ink support to an existing project:

- Install or update the Meta Core SDK in your Unity project using the Package Manager (Window -> Package Manager). Be sure to select the latest version (v68.0.2 or later).

- Download the `MxInkActions.asset` file and add it to your Assets folder. This file lists all of the input/output fields from the MX Ink Interaction Profile. You can access this data in your code using the short names associated with each field.

- Add `MxInkActions` to the Input Action Sets in your Meta XR settings: Edit -> Project Settings… -> Meta XR -> Input Actions.

- Use the following methods to retrieve the pose and inputs from the stylus and to trigger haptic feedback:

The following code fragments should be called from the `Update()` method of a script attached to the GameObject that has the stylus 3D model as a child.

Retrieving Stylus Pose

This retrieves the pose (position and rotation) of the stylus tip:

```csharp
string MX_Ink_Pose = "aim_right";
if (OVRPlugin.GetActionStatePose(MX_Ink_Pose, out OVRPlugin.Posef handPose))
{
    // Flip the Z axis to convert from OpenXR's right-handed coordinates to Unity's left-handed ones.
    transform.position = handPose.Position.FromFlippedZVector3f();
    transform.rotation = handPose.Orientation.FromFlippedZQuatf();
}
```

Reading Stylus Inputs

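```csharp
// stylus_tip_value, stylus_middle_value and _stylus_docked are fields declared elsewhere in the script.
OVRPlugin.GetActionStateFloat("tip", out stylus_tip_value);            // tip pressure
OVRPlugin.GetActionStateBoolean("front", out bool stylus_front_button);
OVRPlugin.GetActionStateFloat("middle", out stylus_middle_value);      // middle button value
OVRPlugin.GetActionStateBoolean("back", out bool stylus_back_button);
OVRPlugin.GetActionStateBoolean("dock", out _stylus_docked);           // true while in the MX Inkwell
```

Triggering Haptic feedback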

```csharp
OVRPlugin.TriggerVibrationAction("haptic_pulse", OVRPlugin.Hand.HandRight, duration, amplitude);
```

Here, `duration` is the number of seconds the haptic feedback lasts, and `amplitude` is a normalized value where `1.0` is the strongest vibration the device can produce.

Detect when MX Ink stylus is active

To determine whether the MX Ink stylus is active, you can obtain the names of the interaction profiles associated with the devices currently linked to the left and right hands:

```csharp
var leftDevice = OVRPlugin.GetCurrentInteractionProfileName(OVRPlugin.Hand.HandLeft);
var rightDevice = OVRPlugin.GetCurrentInteractionProfileName(OVRPlugin.Hand.HandRight);
```

The Quest 3 Touch controller interaction profile name is:

`/interaction_profiles/meta/touch_controller_plus`

The MX Ink interaction profile name is:

`/interaction_profiles/logitech/mx_ink_stylus_logitech`

Using the code above, you can determine not only whether the MX Ink stylus is active but also which hand it is assigned to. This lets you switch 3D models and tailor the UI/UX of your application to the active device. For a complete example, refer to the `VrStylusHandler.cs` script in the sample code.
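The profile comparison can be wrapped in a small helper so the rest of the app only deals with a hand value. This is a minimal sketch, assuming `GetCurrentInteractionProfileName` returns the profile path as a string (possibly empty when nothing is bound to that hand); `StylusHand` and `MxInkProfile` are hypothetical names, and the helper has no Unity dependency.

```csharp
using System;

// Hypothetical: which hand, if any, has the MX Ink stylus assigned.
public enum StylusHand { None, Left, Right }

public static class MxInkProfile
{
    // MX Ink interaction profile path, as listed above.
    public const string ProfilePath = "/interaction_profiles/logitech/mx_ink_stylus_logitech";

    // leftProfile/rightProfile: the values returned by
    // OVRPlugin.GetCurrentInteractionProfileName for each hand (may be null or empty).
    public static StylusHand Detect(string leftProfile, string rightProfile)
    {
        if (string.Equals(rightProfile, ProfilePath, StringComparison.Ordinal)) return StylusHand.Right;
        if (string.Equals(leftProfile, ProfilePath, StringComparison.Ordinal)) return StylusHand.Left;
        return StylusHand.None;
    }
}
```

For example, `var hand = MxInkProfile.Detect(leftDevice, rightDevice);` could then decide which 3D model to show, or which `OVRPlugin.Hand` value to pass to `TriggerVibrationAction`.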

Legacy Touch Controller Mappings

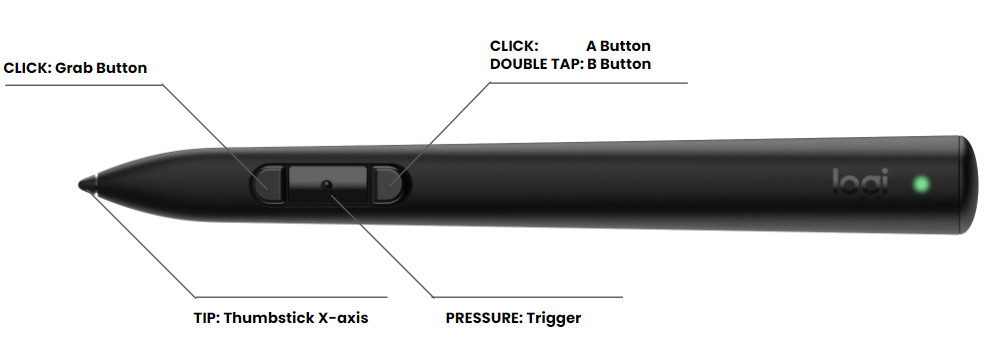

If an application does not support the stylus interaction profile, the stylus inputs can be still read as legacy Touch Controller inputs. The legacy mappings are shown in the diagram below.

Using the legacy Touch Controller mapping is not recommended. In Meta OS v69 and later, there are several known issues to be aware of with this approach:

- The stylus pose retrieved in Unity will have an offset of approximately 5.5 cm on the z-axis. Your app should account for this to ensure accurate positioning of the stylus 3D model and other interactions.

- The tip value is reported as the thumbstick x-axis, with a ‘deadzone’ of 10%. As a result, the stylus tip is less sensitive than when using the MX Ink Interaction Profile, as described in the previous section.

- Applications that have not been updated to support MX Ink may not work as expected, because some interactions are not possible given the stylus's different input capabilities compared to the Touch Controller. For example, MX Ink has no thumbstick or B button.
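If you must use the legacy mapping, one way to compensate for the z-axis offset is to move the pose position along the pose's own z-axis. This is a minimal sketch, assuming the offset lies along the pose's local z-axis; the direction (sign) of the offset is not stated above, so verify it on device. The sketch uses `System.Numerics` types so it runs without Unity; in a Unity script the equivalent is `position + rotation * new Vector3(0f, 0f, zOffset)` with `UnityEngine` types. `LegacyPoseCorrection` is a hypothetical name.

```csharp
using System.Numerics;

// Hypothetical helper for the legacy Touch Controller mapping.
public static class LegacyPoseCorrection
{
    // Approximate magnitude of the offset (5.5 cm). The sign is an assumption:
    // verify the direction on device and negate it if the model moves the wrong way.
    public const float ApproxZOffsetMeters = 0.055f;

    // Assumes the offset lies along the pose's local z-axis: rotates a local
    // (0, 0, zOffsetMeters) vector into world space and adds it to the position.
    public static Vector3 CorrectPosition(Vector3 position, Quaternion rotation, float zOffsetMeters)
    {
        Vector3 worldOffset = Vector3.Transform(new Vector3(0f, 0f, zOffsetMeters), rotation);
        return position + worldOffset;
    }
}
```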